Real-Time Analytics with Azure Databricks

This beginner-friendly article explores Azure Databricks in the Industrial Internet of Things (IIoT), detailing its role across the analytics pipeline, from data ingestion through machine learning to data visualization. It includes a practical case study on wind turbine optimization that shows how Azure Databricks addresses key challenges in modern data analytics.

Introduction

In the fast-evolving world of data analytics, Microsoft Azure has emerged as a key player. Azure Databricks, a vital component of Azure’s suite, revolutionizes how businesses approach data ingestion, processing, and machine learning (ML). This guide introduces Azure Databricks, explaining its role in transforming traditional data analysis into cutting-edge business solutions.

The Evolution from Traditional to Modern Analytics

Traditional data analytics, reliant on simple databases and historical data, falls short in today’s dynamic business environment. Azure Databricks addresses this gap by enabling advanced analytics that drive innovation and growth.

Key Challenges and Azure Databricks Solutions

- Simplifying Complex Architectures:

  - Challenge: Complexity in integrating various cloud services for analytics and ML.

  - Azure Databricks Solution: Offers a unified architecture combining a shared data lake, open storage format, and optimized compute service for all data workloads.

- Boosting Performance and Reducing Costs:

  - Challenge: Need for scalable performance amidst increasing data volume.

  - Azure Databricks Solution: Merges data warehouse performance with data lake economics, enabling real-time data processing and insights.

- Enhancing Data Team Collaboration:

  - Challenge: Silos and inefficiencies from using separate data engineering and science tools.

  - Azure Databricks Solution: Integrates data science and ML tools, fostering a collaborative environment and a unified data approach.

Core Features of Azure Databricks

- Simplicity: Unifies analytics, data science, and ML, streamlining data architecture.

- Openness: Supports open source, standards, and frameworks, ensuring compatibility and future-readiness.

- Collaboration: Facilitates joint efforts among data engineers, scientists, and analysts using shared tools and data lakes.

Real-World Use Case of Azure Databricks: Wind Turbine Optimization

The Architecture:

-

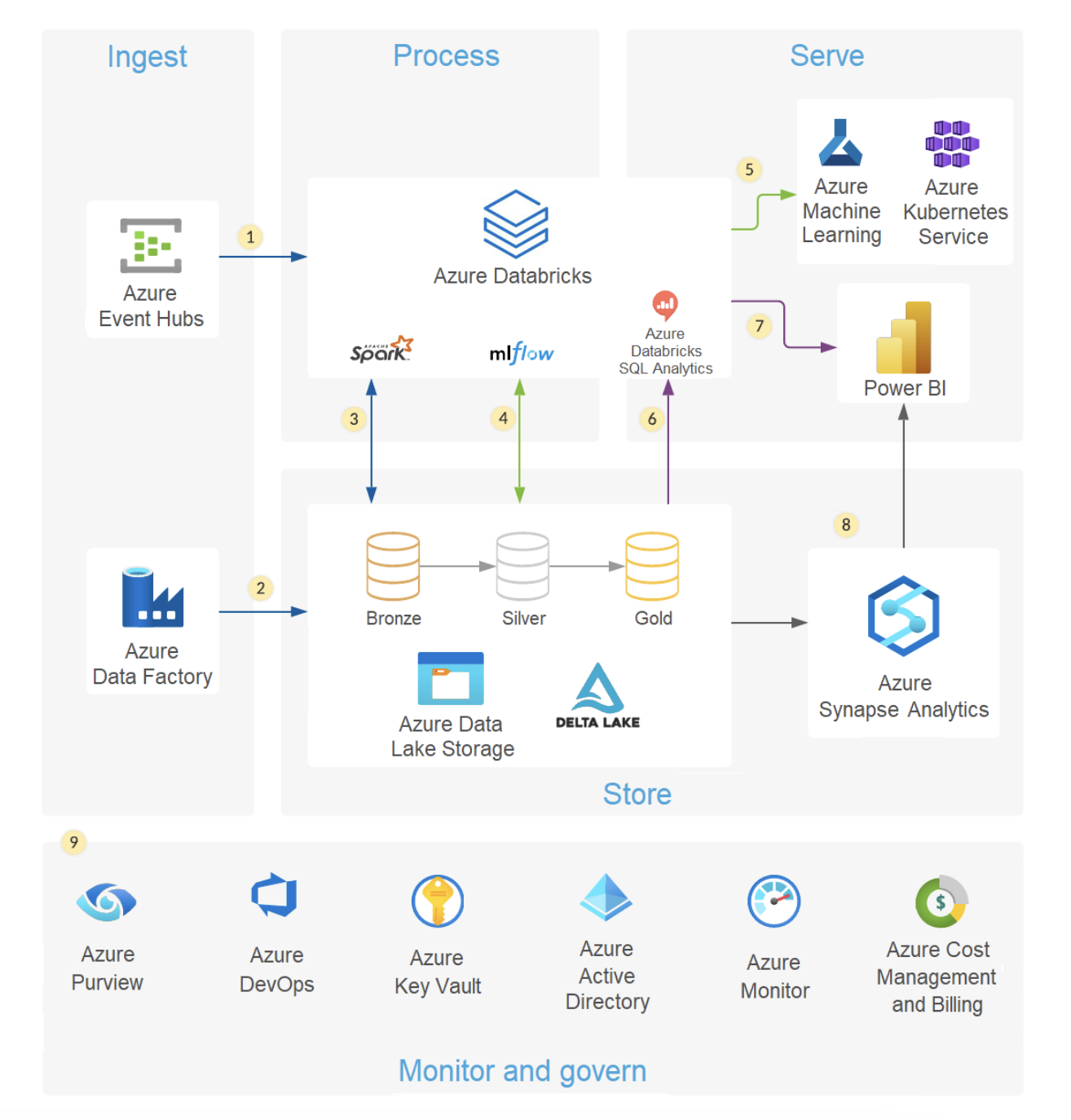

Ingesting Data: Azure Event Hubs The journey begins with data ingestion. Azure Databricks efficiently handles streaming data through Azure Event Hubs. It’s akin to a digital funnel, gathering real-time data from various sources.

- Ingesting Data (Azure Data Factory): Concurrently, batch data (large, accumulated datasets) is ingested through Azure Data Factory into Azure Data Lake Storage. This dual approach ensures that all types of data, whether flowing in a steady stream or arriving in large, periodic waves, are captured for analysis.

- Combining and Refining Data: Once ingested, the data, whether streaming or batch, structured or unstructured, finds a common meeting ground in Azure Databricks. Using the medallion model with Delta Lake on Azure Data Lake Storage, Azure Databricks combines and refines this data. This step is crucial because it transforms raw data into a format that is ready for deeper analysis and machine learning.

- Data Science and Machine Learning: Here’s where the magic happens. Data scientists work with this well-organized data using managed MLflow in Azure Databricks for data preparation, exploration, and model training. The platform supports SQL, Python, R, and Scala, and integrates popular libraries such as Koalas (now the pandas API on Spark), pandas, and scikit-learn. This flexibility allows for a tailored approach to model development, optimizing both performance and cost.

- Model Deployment and Serving: Once the models are ready, they can be served directly within Azure Databricks for batch processing, streaming, or REST API access. There’s also the option to deploy these models to Azure Machine Learning web services or Azure Kubernetes Service (AKS), offering flexibility in how the models are used.

- SQL Analytics: For users who prefer SQL, Azure Databricks offers SQL Analytics (since renamed Databricks SQL). This feature allows ad hoc SQL queries on the data lake and adds tools such as a query editor, query history, and dashboards.

-

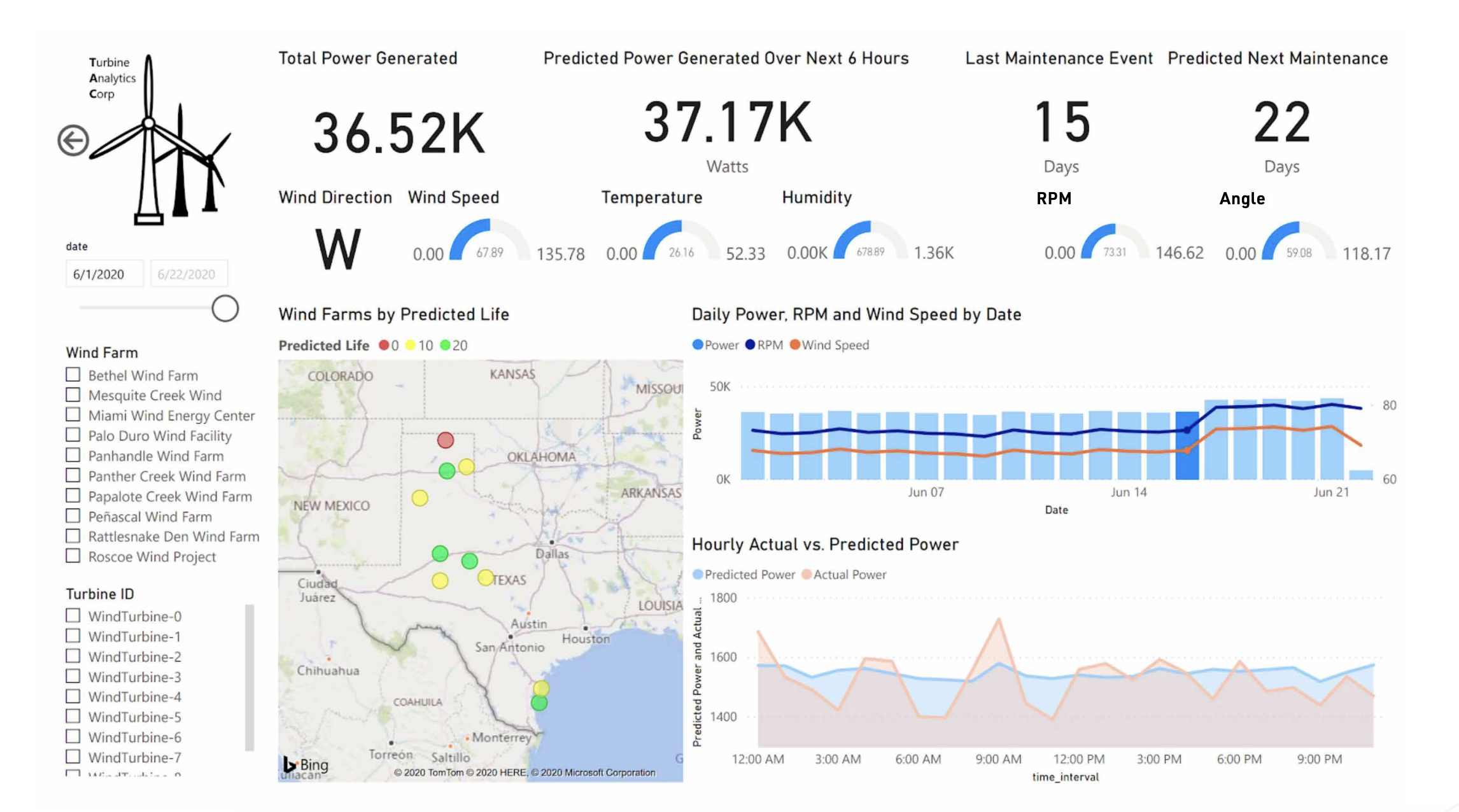

Power BI Integration Furthermore, integration with Power BI through a native connector enables sophisticated reporting and dashboard creation.

- Expanding to Azure Synapse for Data Warehousing: Should there be a need for a data warehouse, Azure Databricks interfaces with Azure Synapse. This allows refined ‘Gold’ data sets to be transferred from the data lake into Synapse, providing a robust environment for business analytics.

- Leveraging Azure’s Ecosystem for Enhanced Collaboration and Security: Azure Purview (now Microsoft Purview) for data governance, Azure DevOps for CI/CD, Azure Key Vault for secrets management, Azure Active Directory (now Microsoft Entra ID) for access control, Azure Monitor for performance tracking, and Azure Cost Management for financial governance are all part of this integrated approach.

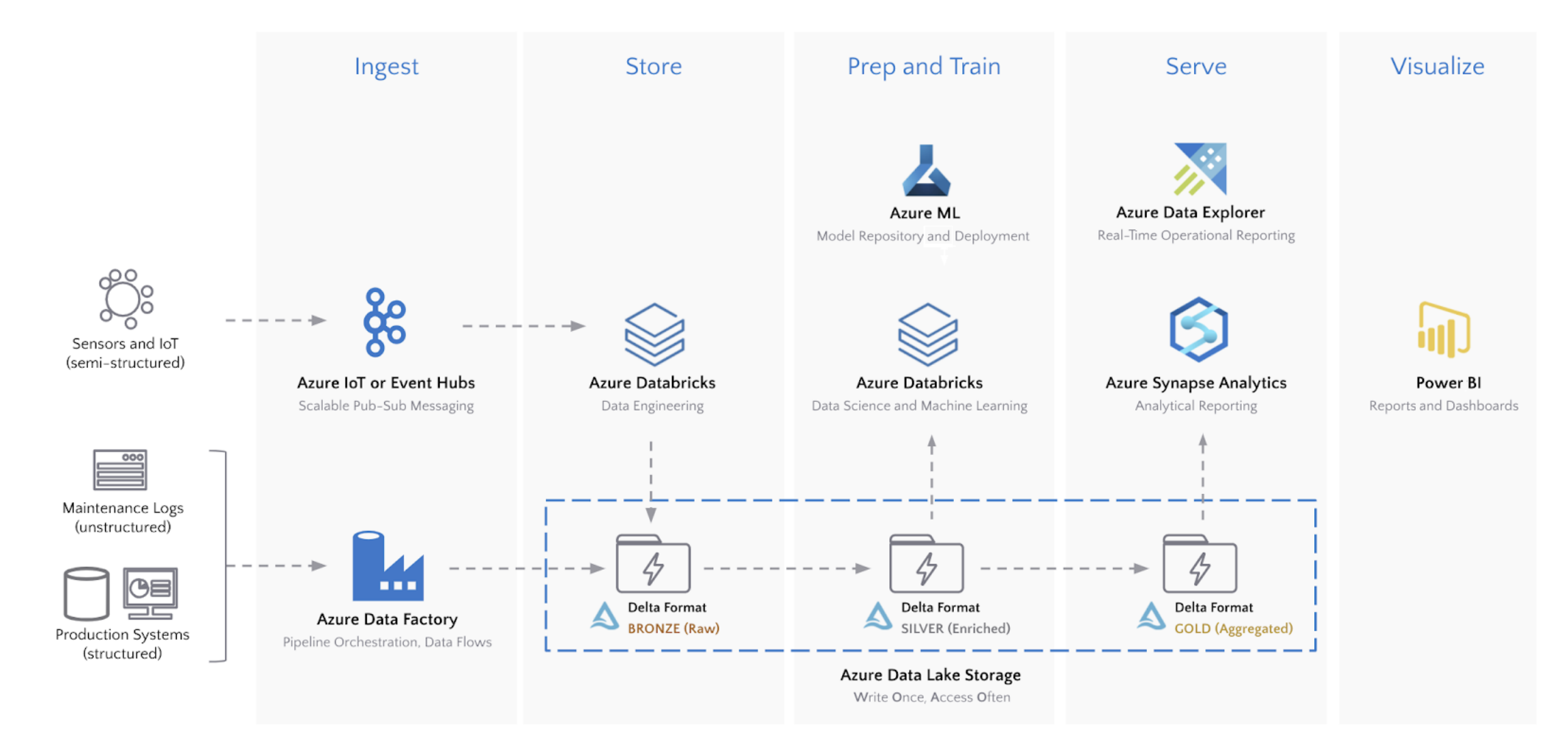

Databricks - Ingest, Store, Prep, Train, Serve, Visualize

Azure Data Lake Storage (ADLS):

ADLS is a key component that follows a write-once, access-often pattern, crucial for analytics in Azure. However, ADLS alone can’t effectively handle the challenges of time-series streaming data.

Delta Storage Format:

To complement ADLS, the Delta storage format provides a layer of resilience and performance. It stands out in several ways:

- Unified Batch and Streaming: ACID-compliant transactions let the same table serve both batch and streaming workloads, which simplifies the architecture.

- Schema Enforcement and Evolution: Ensures reliability and accommodates evolving IoT devices.

- Efficient Upserts: Supports in-place updates and merges, crucial for handling delayed IoT readings.

- File Compaction: Addresses the issue of numerous small files in streaming data.

- Multidimensional Clustering: Enhances filtering and joining capabilities, especially useful for time-series data.
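To make two of the behaviours in this list more concrete, here is a minimal plain-Python sketch of schema enforcement and upsert semantics. It does not use Delta Lake itself; the `SCHEMA` dictionary, the field names, and the `validate` and `upsert` helpers are hypothetical and exist only for illustration.

```python
from typing import Any

# Hypothetical schema for one turbine reading: field name -> expected type.
SCHEMA = {"device_id": str, "timestamp": str, "rpm": float}


def validate(record: dict[str, Any]) -> None:
    """Reject records whose fields or types do not match SCHEMA."""
    if set(record) != set(SCHEMA):
        raise ValueError(f"unexpected fields: {sorted(set(record) ^ set(SCHEMA))}")
    for name, expected in SCHEMA.items():
        if not isinstance(record[name], expected):
            raise TypeError(f"{name} must be {expected.__name__}")


def upsert(table: dict[tuple[str, str], dict[str, Any]], record: dict[str, Any]) -> None:
    """Insert the record, or overwrite the existing row with the same key."""
    validate(record)
    key = (record["device_id"], record["timestamp"])
    table[key] = record


table: dict[tuple[str, str], dict[str, Any]] = {}
upsert(table, {"device_id": "t1", "timestamp": "2024-01-01T00:00", "rpm": 9.5})
# A delayed, corrected reading for the same device and time replaces the row
# instead of creating a duplicate.
upsert(table, {"device_id": "t1", "timestamp": "2024-01-01T00:00", "rpm": 9.8})
```

In a real pipeline the table format performs both steps; the sketch only shows why keyed merges keep delayed readings from producing duplicate rows.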

Deployment and Data Flow:

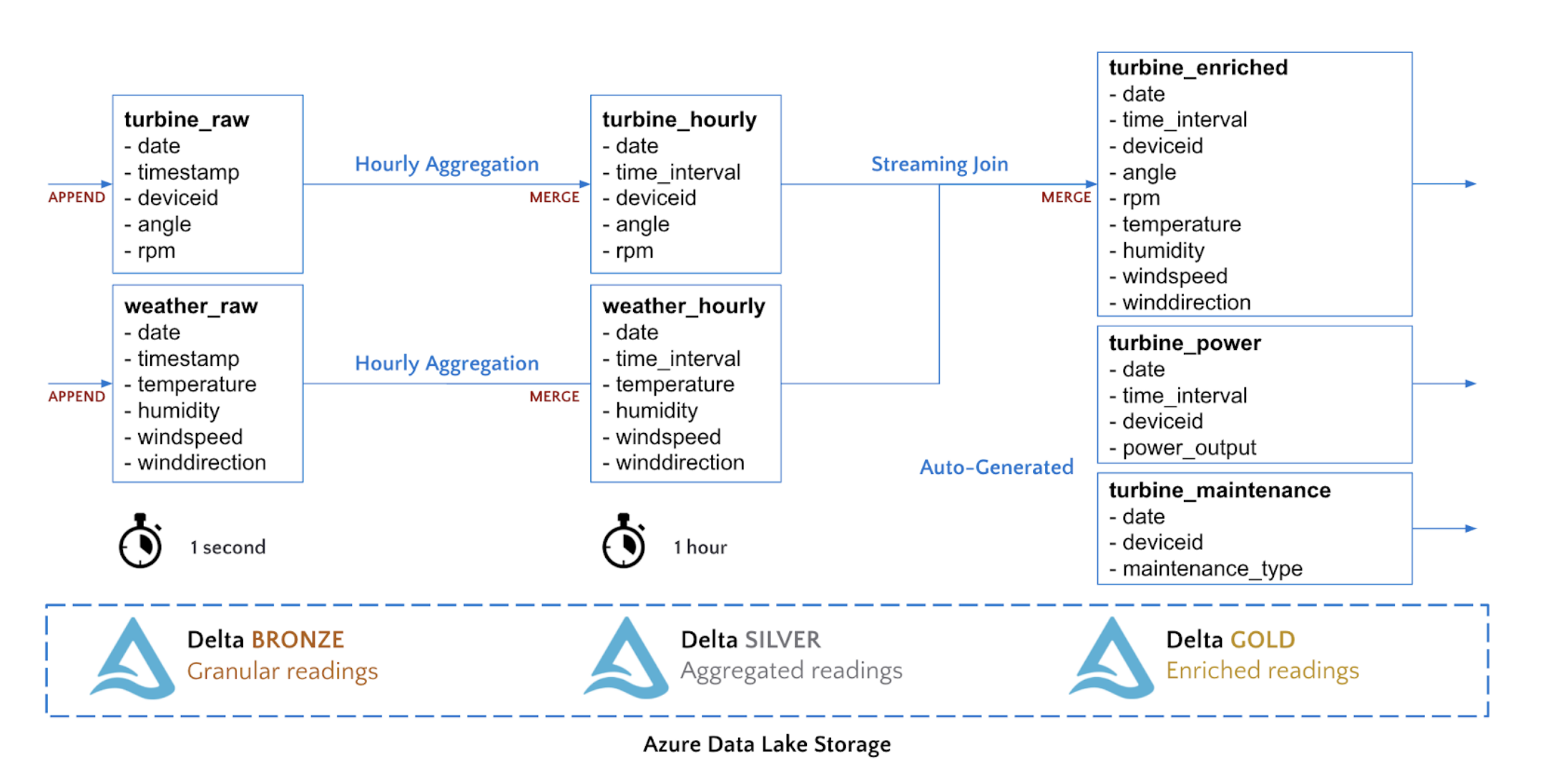

Data Ingest: Sensor readings from IoT devices, like wind speed and turbine RPM, are sent to Azure IoT Hub and then streamed into Databricks.
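As a rough illustration of what a single ingested reading might look like, the sketch below parses one JSON telemetry message into a flat record. The message layout and the field names (`deviceId`, `windSpeed`, `rpm`) are assumptions made for this example, not a format defined by Azure IoT Hub or by this article.

```python
import json
from datetime import datetime, timezone


def parse_reading(raw: str) -> dict:
    """Turn one JSON telemetry message into a flat, typed record."""
    msg = json.loads(raw)
    return {
        "device_id": msg["deviceId"],
        # Normalize the timestamp to UTC so later hourly bucketing is consistent.
        "timestamp": datetime.fromisoformat(msg["timestamp"]).astimezone(timezone.utc),
        "wind_speed": float(msg["windSpeed"]),
        "rpm": float(msg["rpm"]),
    }


# Hypothetical message payload, as it might arrive from a turbine sensor.
raw_message = (
    '{"deviceId": "turbine-01", "timestamp": "2024-01-01T10:15:00+00:00", '
    '"windSpeed": 7.2, "rpm": 9}'
)
record = parse_reading(raw_message)
```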

Data Storage and Processing: The data undergoes a multi-hop pipeline journey through Bronze, Silver, and Gold data levels, each representing stages of data refinement and aggregation.

The Bronze to Silver Journey: Here, data is aggregated into one-hour intervals using streaming MERGE commands.
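The hourly aggregation can be sketched in plain Python as a keyed upsert into a running aggregate. This is a stand-in for the streaming MERGE described above, not Databricks code; the `merge_reading` helper and the column names are hypothetical.

```python
from datetime import datetime


def hour_bucket(ts: datetime) -> datetime:
    """Truncate a timestamp to the start of its hour."""
    return ts.replace(minute=0, second=0, microsecond=0)


def merge_reading(silver: dict, device_id: str, ts: datetime, rpm: float) -> None:
    """Upsert one reading into the hourly aggregate row for its device."""
    key = (device_id, hour_bucket(ts))
    row = silver.setdefault(key, {"count": 0, "rpm_sum": 0.0})
    row["count"] += 1
    row["rpm_sum"] += rpm
    row["rpm_avg"] = row["rpm_sum"] / row["count"]


silver: dict = {}
merge_reading(silver, "t1", datetime(2024, 1, 1, 10, 5), 9.0)
merge_reading(silver, "t1", datetime(2024, 1, 1, 11, 2), 12.0)
# A late reading for the 10:00 hour updates the existing row
# rather than adding a new one.
merge_reading(silver, "t1", datetime(2024, 1, 1, 10, 50), 11.0)
```

Keying each row on device and hour is what lets a delayed reading land in the correct interval after later intervals have already been written.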

The Silver to Gold Transition: This stage involves joining streams into a single table for hourly weather and turbine measurements.
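The join at this stage can be pictured as an inner join on a shared device-and-hour key. The sketch below uses plain Python dictionaries rather than Delta tables; the `join_hourly` helper, the column names, and the sample values are made up for illustration.

```python
from datetime import datetime


def join_hourly(turbine: dict, weather: dict) -> list[dict]:
    """Inner-join hourly turbine and weather rows on their (device, hour) key."""
    gold = []
    for device_id, hour in sorted(turbine.keys() & weather.keys()):
        key = (device_id, hour)
        gold.append({"device_id": device_id, "hour": hour, **turbine[key], **weather[key]})
    return gold


# Hypothetical Silver-level inputs, keyed by (device_id, hour).
turbine = {
    ("t1", datetime(2024, 1, 1, 10)): {"rpm_avg": 10.0},
    ("t1", datetime(2024, 1, 1, 11)): {"rpm_avg": 12.0},
}
weather = {
    ("t1", datetime(2024, 1, 1, 10)): {"wind_speed_avg": 7.5},
}
gold = join_hourly(turbine, weather)
```

Only hours present in both inputs reach the joined table here; whether unmatched hours should be kept instead (an outer join) is a design choice the article does not specify.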

Querying the Data: The enriched data in the Gold Delta table can be queried immediately, making it ready for AI applications and predictive models.

Analytics and Machine Learning

The enriched data now serves as the basis for advanced analytics and machine learning. In our case, the focus is on optimizing power output and the remaining life of wind turbines. This involves creating models to predict power generation based on operating conditions and estimating the remaining life of turbines. The goal is to balance revenue maximization from power generation against the costs incurred due to equipment strain.
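The trade-off between revenue and equipment strain can be sketched as a small search over candidate operating speeds. Everything below is an assumption for illustration: the two model functions are toy placeholders standing in for trained models, and the price and cost coefficients are invented.

```python
PRICE_PER_KWH = 0.05  # hypothetical electricity price


def expected_power_kw(rpm: float) -> float:
    """Toy placeholder for a trained power-output model."""
    return 20.0 * rpm


def strain_cost_per_hour(rpm: float) -> float:
    """Toy placeholder for a cost derived from a remaining-life model."""
    return 0.1 * rpm ** 2


def hourly_profit(rpm: float) -> float:
    """Revenue from one hour of generation minus the cost of equipment strain."""
    return PRICE_PER_KWH * expected_power_kw(rpm) - strain_cost_per_hour(rpm)


# Evaluate a grid of candidate speeds and keep the most profitable one.
candidates = [float(r) for r in range(0, 16)]
best_rpm = max(candidates, key=hourly_profit)
```

Because strain cost grows faster than revenue in this toy setup, the best speed sits below the maximum, which is the balance the paragraph above describes.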

Similar Real-World Use Cases of Azure Databricks:

- Data Processing and ETL (Extract, Transform, Load)

  - Scenario: A retail company gathers large amounts of data from various sources like sales, customer feedback, and inventory.

  - Application: Use Azure Databricks to clean, transform, and load this data into a structured format in Azure data storage solutions for further analysis.

- Real-Time Data Analytics

  - Scenario: A streaming service wants to analyze viewing patterns in real time to provide personalized recommendations.

  - Application: Implement real-time data streaming and analysis using Azure Databricks to process data as it arrives, enabling instant insights and recommendations.

- Machine Learning and Predictive Analytics

  - Scenario: A financial institution needs to predict loan default risk based on customer data.

  - Application: Utilize Databricks’ machine learning capabilities to build and train models that predict loan defaults, enhancing decision-making.

- Business Intelligence and Reporting

  - Scenario: A healthcare provider requires regular reports on patient care metrics.

  - Application: Azure Databricks can process large datasets to provide insights, which can then be visualized using tools like Power BI for effective reporting.